ETL Testing – A Complete Guide

In this post, we will learn about ETL Testing (Data Warehousing Testing) along with the following

What is ETL?

ETL stands for Extract Transform and Load. Extract, Transform, & Load are the three database functions that are combined into one tool.

ETL tools pull data (extract) from one database and convert (transform) and store (load) into another database. ETL tools are used to build a data warehouse.

Extract: It is the process of reading data from a database

Transform: It is the process of converting the extracted data from its original form into the form it needs to be stored into another database.

Load: It is the process of loading the data into the target database

In other words, the ETL process produces data from multiple sources to make a Data Mart, Operational Data Store, Data Warehouse, Data Hub, or Data Lake.

What is ETL Testing?

When we talk about ETL testing, it is a validation and verification of Data, its completeness, its uniqueness, and data integrity.

The ETL process is used in data warehousing, and it became popular in the 1970s. ETL system integrates data from different systems, which can be on different platforms, different hardware, and can be different vendors.

ETL testing validates the data movement from source to destination and verifying the data count in both source and destination and verifying data extraction, transformation, and also verifying the table relations.

What is Data Warehousing?

Collecting, storing, and managing various kinds of data from ‘n’ no. of different sources and providing meaningful perceptions and prediction to the business is called Data Warehousing.

Data warehousing is an essential entity of BI (Business Intelligence) Systems, which deals with the analysis and reporting of Data.

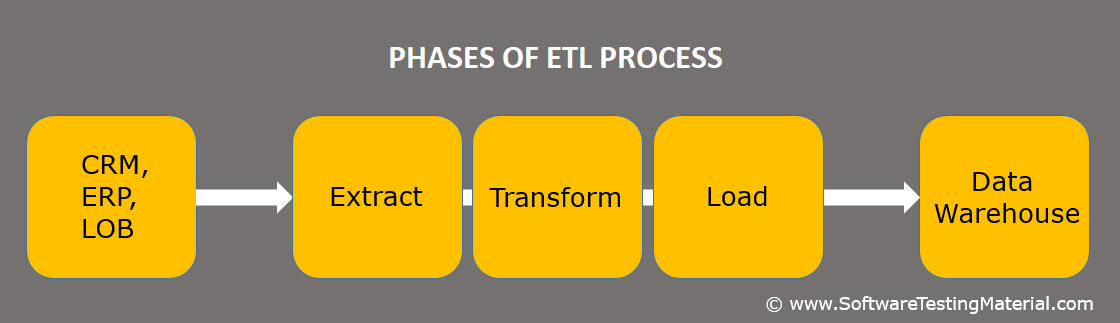

ETL Process

ETL Process is divided into 3 phases

Extract Data

As the first step, data is extracted from multiple sources into a staging area.

Sources can be following or a combination of all

- CRM(Customer Relationship Management software) like Salesforce, Zoho, HubSpot, Microsoft Dynamics, etc.

- ERP(Enterprise Resource Planning) like Microsoft Dynamics, Oracle Ebusiness Suite, NetERP, Lawson software, SAP Business one, etc.

- LoB(Line of Business) applications like any accounting, supply chain management, and resource planning applications

The staging area is used to extract the data from different sources in different formats and to clean and organize the data. The staging is a middle place where source data is kept temporary.

Data can be in Structure format (Database, XML, Excel, CSV, etc) or Unstructured format(Email, Webpage, etc, Screen Scrapping data)

More examples of data source formats are – JSON, Relational databases, Flat files, Virtual Storage Access Method (VSAM), IMS(Information Management System).

Transformation

Transformation is the second step of ETL where multiple rules and functions are applied to extracted data and to create data in a single format.

The objective of the transformation process is to convert multiple sources and multiple formats of data into a single system format.

The transformation process also validates the data to confirm the data correctness.

For example, if certain data should have a specific pattern or some default values and if the data pattern of the default value is not found then data is rejected and reported back to the source system.

During transformation, data goes through the following sub-processes

- Cleaning (Removing, or deleting error or inconsistency of data to improve data quality)

- Filtering (selection of relevant rows or columns)

- Joining (linking of relevant data of multiple sources)

- Sorting (sorting in desired order)

- Splitting (Splitting data into columns)

- Deduplication (finding and removing duplicate records)

- Summarization (data is collected and stored in a summarized format. For example total sales in a particular year)

- Data validation: Rejecting the data which is missing some default value, or predefined format.

- Derivation: Business rules are applied on data, and checked for validity, if found incorrect then its returned back to the source.

Once the data is transformed by applying all the above methods, data become consistent and ready to load.

Load Data

The load stage depends on the Business Requirements or on the usage of the data.

Given below are some requirement examples

- Data is going to utilized for analysis purposes.

- Data is utilized to give search results.

- Data is utilized for the Machine learning algorithm.

- Data is utilized for some real-time application

Depending on the data utilization purpose, data is loaded to the data warehouse with the desired format of the business. It can be a batch load or a full load depending on business needs.

When data load happens, all the triggers get activated on the targeted database, for example, referential integrity, mandatory field checks, uniqueness of data.

These triggers again validate the quality of the data before loading the final data into the data warehouse.

For example, a business needs records of all customers who are taking financial services from different financial institutions. The job of Data warehousing is to provide this data into a uniform and single format as data extracted from different systems is in different formats.

ETL process simplifies and normalize the unstructured data, and load into a single format data.

Some times, ETL is used to transfer the application’s data to a new database, ETL helps to load the data from old database schema to new database schema.

ETL Testing Types

ETL testing is categorized in the following types.

- Testing of New System: In this, Data is extracted from different types of sources and loaded into the Data warehouse. So testing of Data accuracy, validity, integrity, and performance of the application can be done.

- Migration testing: To verify the data migration of a source system to the data warehouse with data integrity and no loss of data.

- Incremental Testing: It is done when there are some changes in the data warehouse like new data type is added in the Data warehouse database.

- Testing of Reports: When data is completely loaded into the Data warehouse, then testing of reports is done to check data quality, validity, business logic, and calculations

- Source to target data testing: To verify that there should not be any data loss or data truncation while loading the data from source to data warehouse.

- Performance testing: It is done to verify the data loading happens within the expected time and the users of the application are able to perform their job without any delay.

- GUI testing: This testing is done to check reports are showing up correctly to its end users.

ETL Test Life Cycle and Test Artifacts.

Just like any other software testing, ETL also follows a testing life cycle.

1. Understand Business Requirements

- Business requirement document

- Technical specification document

- Data mapping document.

- Database structure, application logic, and how data is used in the current system

2. Validate Business Requirements

- Cross-check each and every requirement with business analyst and clarify the doubts and issues related to data mapping.

- Cross-check logic of data transformation and validate that there is no gap

3. Estimate the Test efforts

- Estimate how much time is required for test planning, and test artifacts creation.

- As per the number of business requirements, high-level test execution efforts are estimated

- As per the overall project time, budget and team size, no. of testing days are estimated.

4. Test Planning

- Test methods and techniques used, tools used, and schedule of testing

5. Creating Test Artifacts

It includes test cases, test scenarios preparation, keeping the following key points in the mind.

- The structure of source data should be the same as on the final database.

- All the tables on the destination are updated including all referential data.

- The data model is correctly followed by the destination.

- Archival and purge strategy.

- Review the data dictionary.

- Data transformation rules.

6. Test data preparation

7. Test cases review

- Reviews are done on test cases by dry running these test cases

8. Test Execution:

Test case execution happens as per the schedule. Following are the steps in the ETL test execution phase

- Execute ETL jobs.

- Check that data load is done successfully on the target and data is valid

- Check the target and validate the data quality and correctness by executing SQL queries

- Check ETL tool logs, where it caused error or terminated abnormally.

- Execute the test cases

9. Bug Reporting Defect closure:

- Defects are logged and defect closure is done by the development team.

10. Sign off and Reporting:

- The number of test cases executed, status, and overall status is shared with the team. Sign off can be given if enough testing is done and bug count is close to zero, and no high severity issue is present in the system.

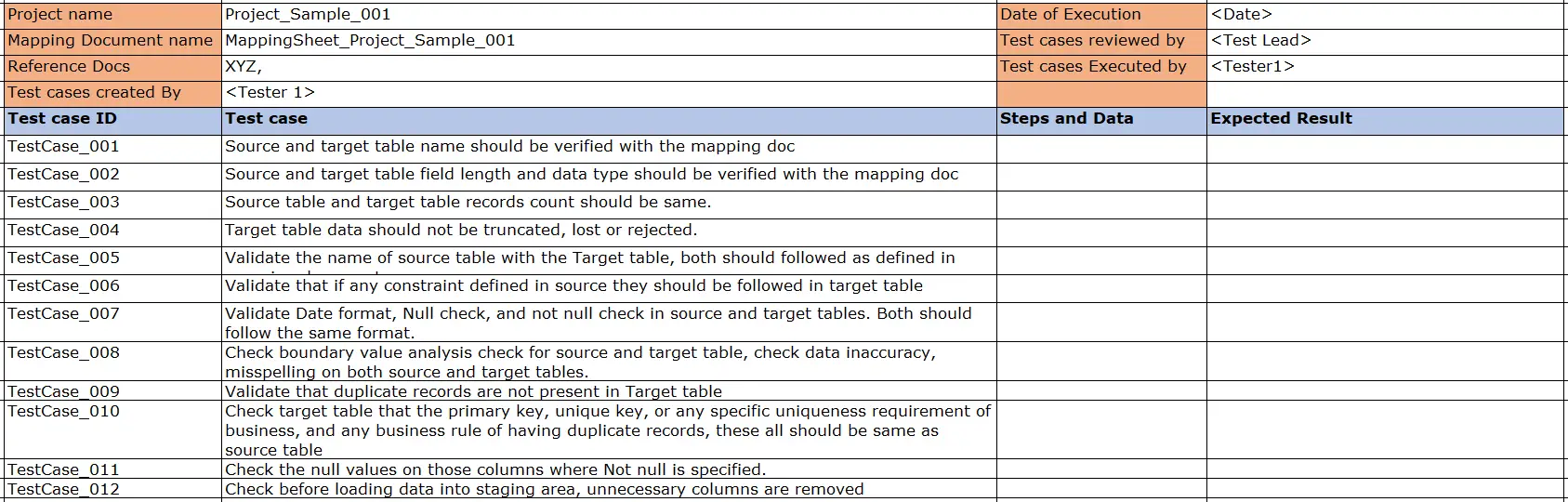

ETL Test cases (Sample ETL Test Case Template)

ETL mapping sheets and DB Schema of Source & Target are the key documents that are always referred by the ETL test engineer while creating test cases and scenarios.

ETL Mapping sheet has the following information

1. Each and every column of source and destination table

2. Reference table information of every column of source and destination table

Few examples of test cases.

- The source and target table structure should match with the ETL mapping sheet.

- Data type and data length at source and target should be the same.

- The Data field type format should match on both source and target tables.

- Column names should map with the ETL sheets on both source and target

- Constraints are defined as expected on the target as defined on the source.

- The record count is the same on source and target after data load happens.

- The date format is the same on the source and target tables.

- Check no duplicate records are found on target tables.

Bug types in ETL testing

- UI/Interface related bugs like – Usability, spelling mistakes, navigation, placement of UI objects, font style, font size, colors, alignment, etc.

- Issues related to Boundary value analysis – minimum and maximum value check.

- Valid and invalid data type issues

- Calculation bugs

- Performance or speed related issues.

- Business rule violation related issues.

- Record duplication bugs.

- Loss of data in some operation.

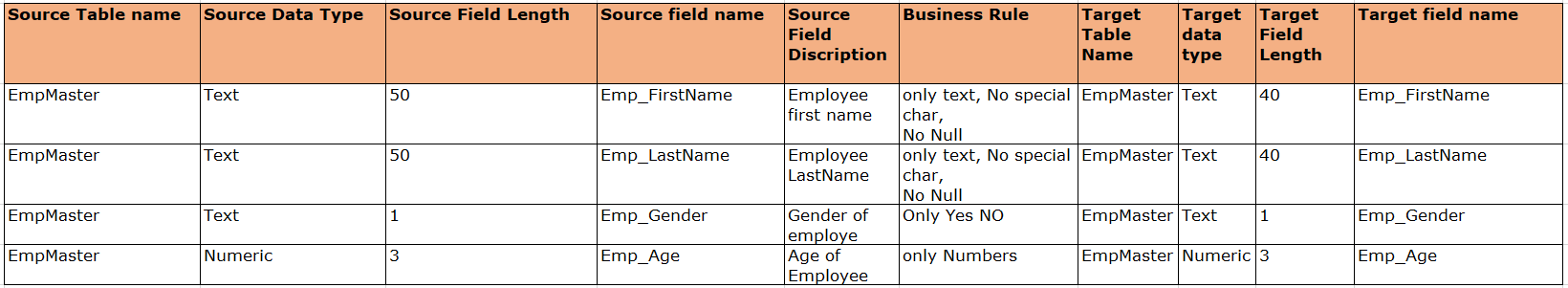

Sample Mapping Sheet:

ETL and DB testing difference

The objective of both testing types is Data validation, but these two are not the same.

The following are some differences between DB and ETL testing.

| ETL Testing | DB Testing |

|---|---|

| ETL testing is more focused on Data movement from multiple sources to data warehouse. | DB testing is focused on database rules and constraints testing of an applications database. |

| Verify data count in source and targeted databases. | Verify every column in the table has valid data values. |

| Testing is done to verify that data extraction, transformation and loading has done as expected. | It is done to check if application is performing CRUD operations correctly, and business needs are met. |

| Works on huge data, historical data and multiple sources are involved. | It is done on business transactional data. |

| In ETL, online analytical processing (OLAP) is used which execute complex queries to analyse historical aggregated data from OLTP systems. | DB uses Online transaction processing (OLTP), which works on real time database operations like creating, processing and storing of data on transactional basis. |

| Data type is not normalized, with more Indexes and aggregations, with less joins. | In DB testing data is normalized with more joins. |

| ETL testing is involved in data extraction, transformation, data load and finally verification of reports on the dashboard. | Database testing is to verify application logic is working correct, data operations are working correctly. |

| ETL testing is multidimensional testing. | DB testing is limited to business and application logic. |

ETL Testing Tools

ETL testing is done manually and also with automation tools, but manual testing is more error-prone and time-consuming. Please refer the link to get the best ETL tools list

How To Select ETL Software

In general, all the ETL tools extract, read, clean, and migrate large volumes of unstructured or structured data from different platforms, then load the single format data to a data store or data warehouse.

Modern ETL tools provide dashboards for data visualization, reporting, analysis, different types of chart views, robust security, collaboration, sharing, mobile view, and memory data.

Before choosing any ETL tool, the following are the criteria that should be checked.

- Multiple data formats support

- Data Profiling

- Supports data cleaning

- Metadata support

- Version control of the ETL maps

- Supports data manipulation

- Support for data mining models

- Provides Automatic documentation

- Support for analytical functions

- Error Handling

- Reading of Non-Structured data

- Pivoting

- Ease of Use

- Training requirement

- Easy Debugging

- Real-time connections supported

How to become an ETL tester and Skillset required.

The following are the skills required to become an ETL tester.

- Good at analyzing business requirements: To understand the Business process and design test cases.

- Good at Data warehouse test planning, UAT plans, test cases by referring on the ETL mapping document and functional design documents.

- Good at database concepts, and can write simple and complex queries on different databases and platforms.

- Experience in PL/SQL Queries: Should be able to perform the Back-End testing, check data consistency by designing and running Oracle PL/SQL queries on the database. Should be able to compare the data with the reports generated on the Data warehouse dashboard.

- Tested stored procedures and developed complex SQL queries for data testing.

- Good at data validation on the target database as per the business rules.

- Worked on flat files, web services, SQL Server, Oracle- extracting data from flat files and load into the target database.

- Worked on one or multiple ETL tools.

- Knowledge of database query tools like TOAD and SQL plus.

- Worked on different operating systems like Windows, Unix, Linux.

- Good at Webservices testing and XML validation, using any tool like SOAP UI

- Good at defect logging using any tool like quality center, Test reporting.

- Good at test summary reporting, signoff, retrospective meetings, and continuous learning.

- Good at functional, system, regression, Integration, and Performance testing.

- Involved in UAT planning and test designing.

With the above-mentioned skills, you can easily get ETL Testing Jobs.

ETL testing challenges

There are a few challenges that can hamper the ETL process if not dealt on time.

- Creating a source to target mapping document: if it is not done correctly in the initial stage, entire testing will produce false results. This gap leads to a big challenge for the entire ETL process.

- The requirement is not consistent and changes are occurring very frequently, due to this tester needs to change their scripts very often, and it becomes very slow and may affect the overall schedule of the ETL process.

- Sometime during data migration, data is lost or corrupted, and chances are high that it is overlooked from the tester’s eyes. This can make a data validation issue on the target database.

- Lacking in proper test estimation.

- Testing environment unavailability

- Huge data on Source system: sometimes it makes difficult to test on the target system due to large volume, low performance.

- ETL tool used: modern tools provide a lot of facilities for data validation but if the organization is stick on a traditional tool then it might difficult to test the data effectively.

Conclusion

ETL testing demand is very huge, and the future of ETL tester is bright.

ETL traditional tools like IBM Infosphere Information Server, Informatica, some demanding tools like AWS glue, Alooma, Stitch are gaining popularity due to some features they provide like real-time streaming, very rich dashboards, user-friendly UI, and many more data manipulation features.

The scope of ETL testing is increasing due to the demand for ETL automation in the market.

ETL testers are in need because data is becoming huge, and in this competitive age, every business wants to know the demands of users, the interest level of end-users, so that business gets ready to fulfill the needs of the users, to make timely decisions, they Need a consolidated data.

ETL is helpful in making advance decisions, forecasting for business growth. Top industries that are using ETL are Banking, Finance, Insurance, Health Care, and Information Technologies.

The following are the related posts that help you to get a better ETL Testing Jobs.

Related posts: